Innovation in Search is far from asymptoting. I think we are going to see a lot of exciting next steps in Web Search in the coming years. There is a series of bets being made on what the next disruptive step will be.

* User-generated content (data generated by Twitter, Facebook, Delicious, YouTube, etc.)

* Personalization (better disambiguation of user intent, shorter queries, etc.)

* Real-time Search (fresher results, searching Twitter, etc.)

* Size (searching through more documents, indexing the Deep Web, other data formats, etc.)

* Semantics (better understanding of documents, queries, user intent, etc.)

are all getting a lot of attention & investment.

This post is a classification of classifications of Search Engines. Whenever I hear of a new search engine, I subconsciously try to classify it based on a set of criteria, and that helps me see it in the context of its neighbors in that multidimensional space :). In this post I want to touch upon some of those criteria. Although this is a classification (of classifications) of Search Engines, I am being intentionally sloppy and have written it mainly from the perspective of the dimensions along which one can innovate in Search. For example, for category 5 (visualization), one of the classes is the default paradigm of 10 blue links, which I have not bothered to note. The goal here is to look at the search landscape and see the dimensions along which search upstarts are challenging the old guard. The examples are suggestive, not comprehensive.

1. By type of content searched for:

By type here I mean the multimedia type of the results being returned. Bear in mind that what actually gets indexed might be text (as is often the case for image search, video search, etc.).

Audio - last.fm, Playlist, Pandora

Video - YouTube, Metacafe, Vimeo

Images - Like.com

Web pages - Google, Yahoo, Bing, Ask
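Since image and video search often index text rather than pixels, here is a minimal Python sketch of that idea. It assumes each image has already been paired with the text found near it on its page (alt text, caption, surrounding words); the function names, sample URLs and the count-of-matching-terms ranking are hypothetical illustrations, not how any of the engines listed above actually work.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(images: dict[str, str]) -> dict[str, set[str]]:
    """Map each term to the set of image URLs whose nearby text contains it.

    `images` maps a (hypothetical) image URL to text found near it on the page;
    the image pixels themselves are never examined.
    """
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in images.items():
        for term in tokenize(text):
            index[term].add(url)
    return index

def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Rank image URLs by how many distinct query terms their text contains."""
    scores: dict[str, int] = defaultdict(int)
    for term in set(tokenize(query)):
        for url in index.get(term, ()):
            scores[url] += 1
    # Highest score first; ties broken by URL so the order is deterministic.
    return sorted(scores, key=lambda u: (-scores[u], u))
```

For example, if "img/golden_gate.jpg" is captioned "The Golden Gate Bridge at sunset" and "img/bay_bridge.jpg" is captioned "Bay Bridge lights at night", the query "bridge at sunset" returns the Golden Gate image first (3 matching terms) and the Bay Bridge image second (2 matching terms).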

2. By Specific Information Need/Purpose:

A search engine that solves a specific information need better than a general-purpose Web search engine.

Health - WebMD

Shopping - Amazon, eBay, TheFind

Travel - Expedia

Real Estate - Trulia

Entertainment - YouTube

3. Novel Ranking and/or Indexing Methods:

Leveraging features that are not used by current general-purpose Web search engines. This is the hardest way to compete with the incumbent search engines. Startups need to overcome several disadvantages before they can even set up a meaningful comparison with the big guys: disadvantages in data (queries, clicks, etc.), index size, spam data, etc.

Natural Language Search - Powerset

Semantic Search - Hakia

Scalable Indexing - Cuil

Personalized Search - Kaltix

Real Time Search - Twitter, Tweetnews, Tweetmeme, etc.

This can also happen in the context of vertical search engines. For example, when searching for restaurants, a feature like ratings might be useful; it is not cleanly available to general-purpose search engines, but it is a feature a service like Yelp might exploit.
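To make the ratings example concrete, here is a hedged Python sketch of how a vertical engine might fold ratings into ranking. It assumes a hypothetical text-relevance score in [0, 1] and 1-5 star ratings, and it uses a Bayesian average (shrinking the rating of places with few reviews toward a prior) so that a single 5-star review does not dominate. The weights and prior are made-up illustration values, not anything Yelp is known to use.

```python
from dataclasses import dataclass

@dataclass
class Restaurant:
    name: str
    text_relevance: float  # hypothetical query/listing match score in [0, 1]
    avg_rating: float      # mean user rating on a 1-5 star scale
    num_reviews: int

def smoothed_rating(r: Restaurant, prior_mean: float = 3.5, prior_weight: int = 10) -> float:
    """Bayesian average: ratings backed by few reviews are pulled toward prior_mean."""
    total = prior_mean * prior_weight + r.avg_rating * r.num_reviews
    return total / (prior_weight + r.num_reviews)

def score(r: Restaurant, rating_weight: float = 0.4) -> float:
    """Linear blend of text relevance and the smoothed rating rescaled to [0, 1]."""
    rating_01 = (smoothed_rating(r) - 1.0) / 4.0
    return (1 - rating_weight) * r.text_relevance + rating_weight * rating_01

def rank(results: list[Restaurant]) -> list[Restaurant]:
    """Order candidate restaurants from best to worst blended score."""
    return sorted(results, key=score, reverse=True)
```

For instance, with equal text relevance, a place averaging 4.8 stars over 200 reviews outranks one with a perfect 5.0 from just 2 reviews, because the latter's smoothed rating is pulled down to 3.75.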

4. Searching content that is not crawlable by General Purpose search engines:

Typically in these cases, the service running the search engine generates its own data, e.g. YouTube, Twitter, Facebook. Sometimes, though, the data is obtained via an API, as with the variety of Twitter search engines.

Videos - YouTube

Status messages, link sharing - Twitter, Facebook

Data in charts & other parts of the Deep Web - Wolfram Alpha (some of the data seems to have been acquired at some cost)

Job Search - LinkedIn, HotJobs, etc.

5. Visualization:

Innovating on the search result presentation front.

Grokker (Map View)

Searchme (Cover Flow-like search result presentation)

Clusty (Document clustering by topic)

Snap (Thumbnail previews)

Kosmix (Automatic information aggregation from multiple sources)

Google Squared

Some conversational/dialogue-interface-based systems could also fall under this category.

6. Regionalization/Localization:

Regionalization/Localization could mean:

a. Better handling of the native language's character set (tokenization, stemming, etc.). The CJK languages (Chinese, Japanese, Korean) present unique challenges with word segmentation, named entity recognition, etc.

b. Capturing any cultural biases.

c. Blending local and global content appropriately in search results. (This was my research project at Yahoo! Search; I will describe the problem in more detail in a subsequent post.) For example, for the query 'buying cars' issued by a UK user, we don't want to show listings of US cars. But if the same user queries 'neural networks', we don't care whether the result is a US website.
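The local/global blending problem in (c) can be sketched as a re-ranking step. In this minimal Python sketch, a hypothetical hand-written term list stands in for a real query classifier that estimates how locally sensitive a query is, and same-region results get a boost proportional to that estimate. The soft boost (rather than filtering out foreign results), the term list and the weights are all assumptions for illustration, not a description of how Yahoo! Search handled it.

```python
from dataclasses import dataclass

# Hypothetical stand-in for a learned classifier of local intent.
LOCAL_INTENT_TERMS = {"buy", "buying", "cars", "jobs", "restaurants", "weather"}

@dataclass
class Result:
    url: str
    relevance: float  # hypothetical region-independent relevance score
    region: str       # country the page targets, e.g. "UK" or "US"

def locality(query: str) -> float:
    """Fraction of query terms suggesting local intent: 0.0 = global, 1.0 = local."""
    terms = query.lower().split()
    if not terms:
        return 0.0
    return sum(t in LOCAL_INTENT_TERMS for t in terms) / len(terms)

def blend(query: str, results: list[Result], user_region: str,
          max_boost: float = 0.5) -> list[Result]:
    """Re-rank results, boosting same-region pages in proportion to the query's locality."""
    loc = locality(query)

    def blended_score(r: Result) -> float:
        boost = max_boost * loc if r.region == user_region else 0.0
        return r.relevance + boost

    return sorted(results, key=blended_score, reverse=True)
```

With a US result at relevance 0.9 and a UK result at 0.7, a UK user's query 'buying cars' (locality 1.0) lifts the UK page to 1.2 and puts it first, while 'neural networks' (locality 0.0) leaves the US page ahead.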

Crawling, indexing & ranking all need some regionalization/localization, and local search engines can sometimes challenge the larger search engines here.

China - Baidu

India - Guruji

Let me know if you see other forms of classification and I'll update the post to reflect them. From a business perspective, I think there are 4 main things to consider when building a new search engine.

A. How likely is it that you can build a disruptive user experience? (Significantly better ranking, user experience, etc. than the user's default Web search engine. The delta needed is very likely to be much larger than our first guess :))

B. How big will the impact be? (% of the query stream impacted, $ value of the impacted query stream, etc.)

C. How easy is it to replicate? (Search is not sticky. A simple feature will get copied in no time and leave you out in the cold.)

D. Accuracy of the binary query classifier the user would need to have (in her mind) to know when to use your search engine. For a general-purpose search engine this is trivial, but for vertical/niche engines it is important. In plain English: clarity of purpose.

Category 3. is definitely the playground where the big guys play and in my opinion the most exciting. Its where you are exposed to the

black swans. Of course reward & risk are generally proportional and this is also where the riskiest bets are made. My company,

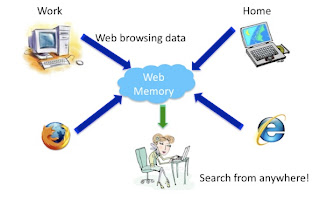

Infoaxe will be entering category 3 in the next 2 months or so. This is a stealth project so I can't talk more about it at this time. I'll post an update once we're live.

[Image courtesy: http://www.webdesignedge.net/our-blog/wp-content/uploads/2009/06/search-engines.jpg]